At the 65th session of the UN Commission on the Status of Women, currently underway (March 15-26, 2021), the focus is on evolving a global roadmap towards "achieving full equality in public life". In the digital epoch, this includes enabling women's effective participation online. Mainstream social media platforms today are privately controlled public arenas that are reconfiguring social interaction and political democracy. Viral sexism, misogyny, and gender-based violence, endemic to these platforms, pose a real threat to this goal, ruthlessly discriminating against and excluding women and people of non-normative genders.

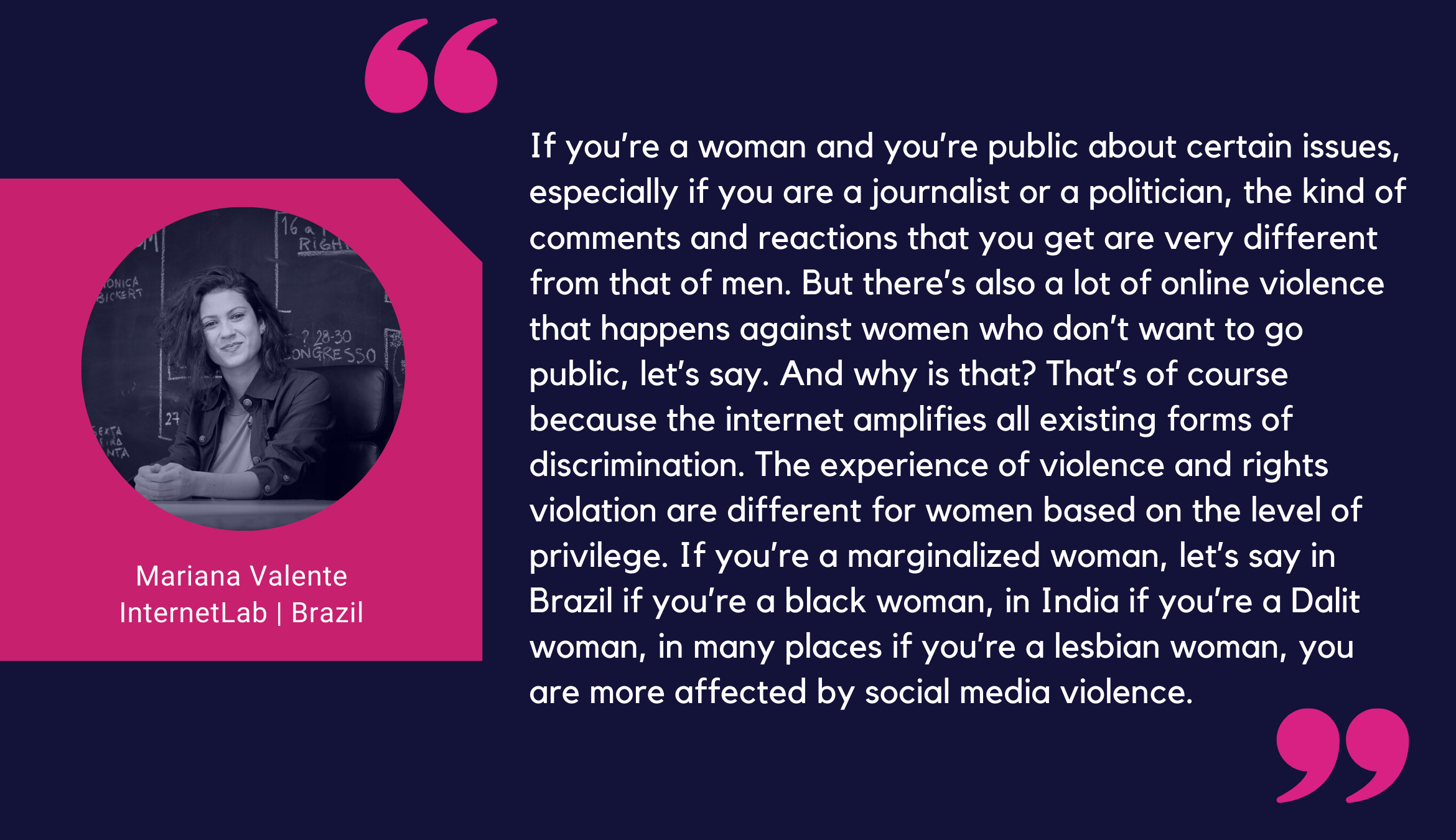

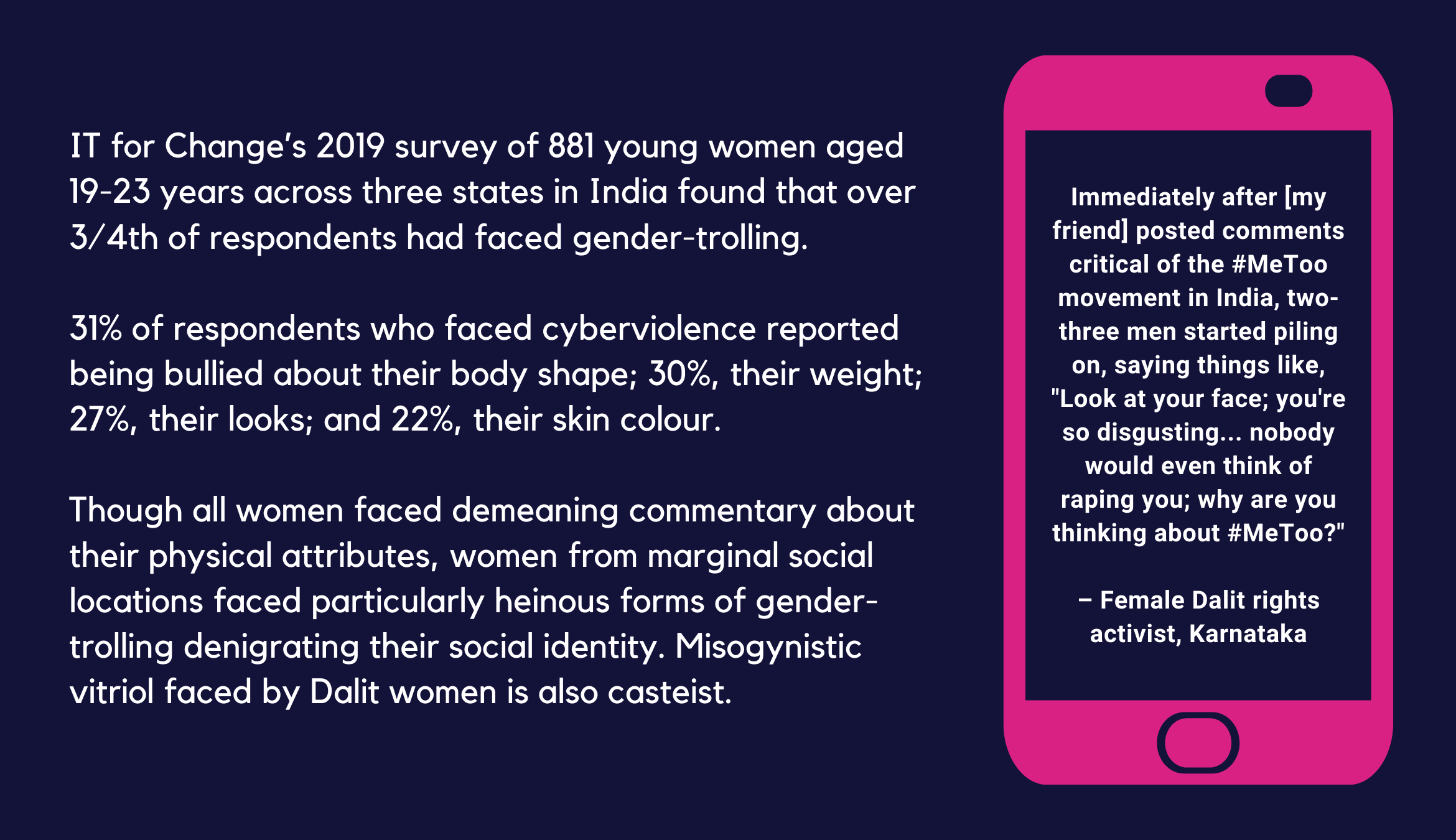

Misogyny may be universal, but it takes countless forms, shaped by the unique, contextual intersections of patriarchy with other social markers such as caste, class, race, and sexual orientation. Other socio-cultural locations also become axes of online oppression, and women in public and political life face especially virulent attacks.

🎙️ Listen to Mariana on the Bot Populi podcast. 🎙️

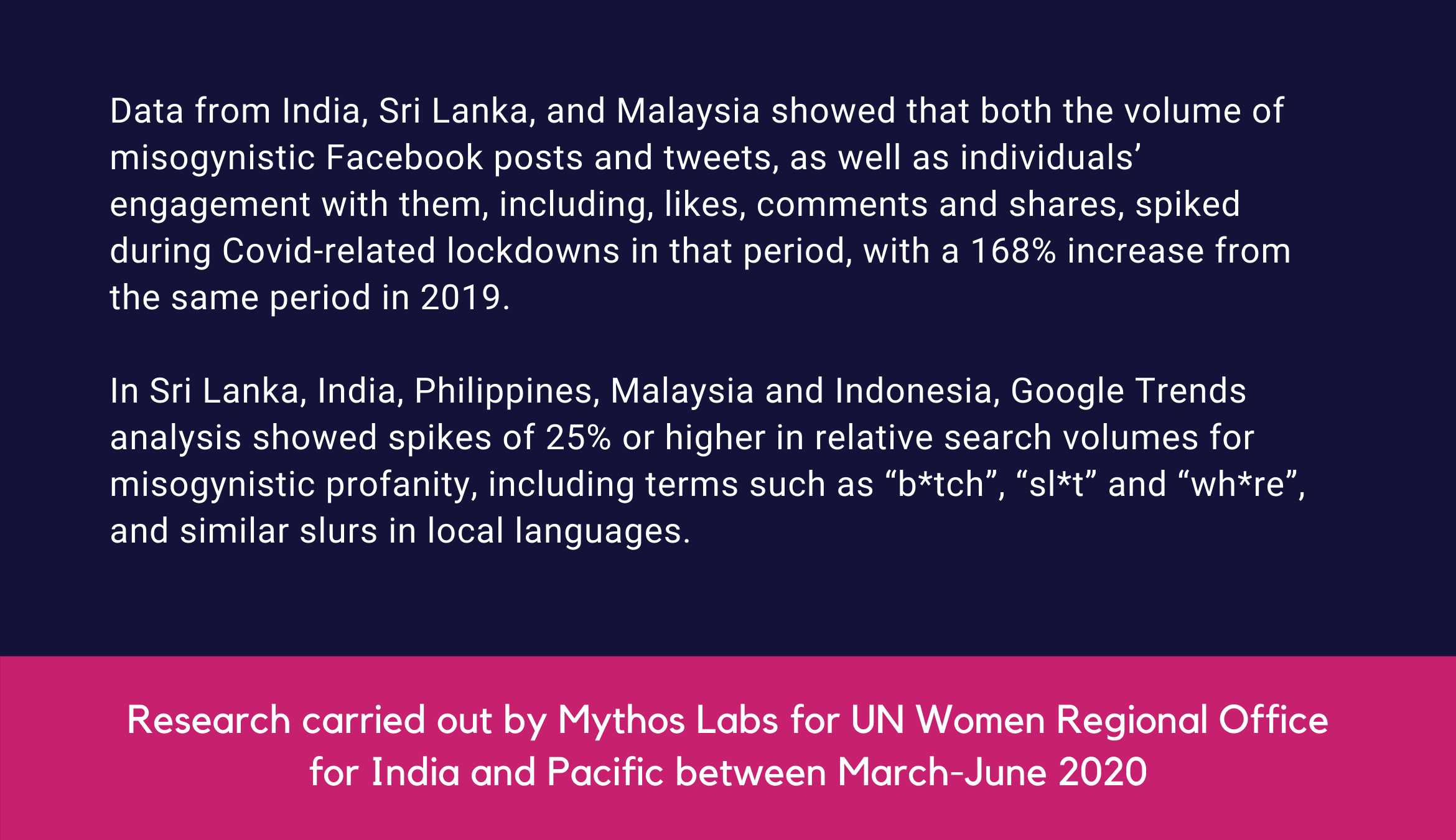

Covid-19 has made matters worse. In the pandemic-stricken world, women are “under siege from gender-based violence” in all spaces, and the internet-mediated public sphere is no exception.

The social distancing and quarantine measures that governments introduced during the pandemic have not only pushed women back into the private sphere but also triggered a rise in gender conservatism and a rollback of women's rights across the world. A wave of anti-feminism is taking over the internet.

IT for Change's analysis of Google Trends data revealed a major spike in search queries from India for 'fake feminism', 'pseudo feminism', and 'toxic feminism' between May 5 and 11, 2020, during the country's national lockdown.

In early 2021, we held a series of conversations with feminist activists from across the globe to reflect on what it would take to reclaim the transformative potential of social media. Undeniably, social media today is a far cry from the liberated space for individuation, identity formation, and solidarity-building for people in marginal social locations that the early days of the internet revolution seemed to promise.

🎙️ Listen to Naomi on the Bot Populi podcast. 🎙️

🎙️ Listen to Yiping on the Bot Populi podcast. 🎙️

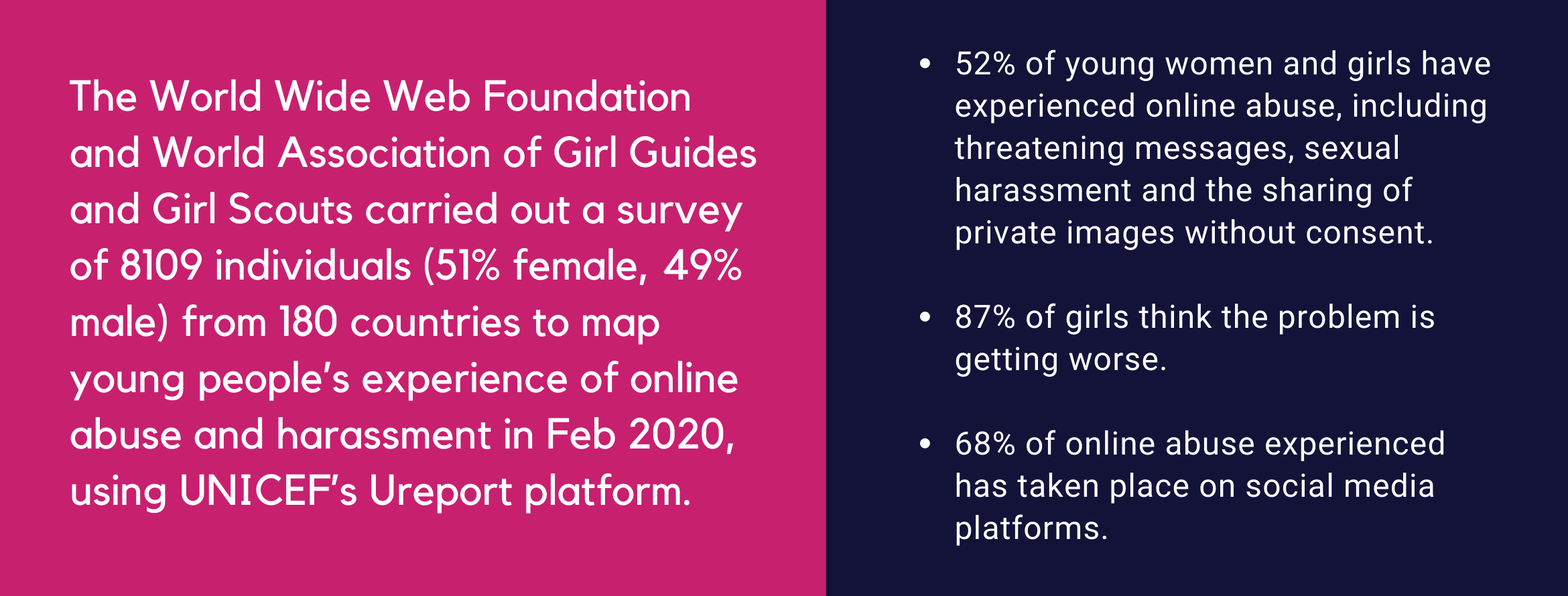

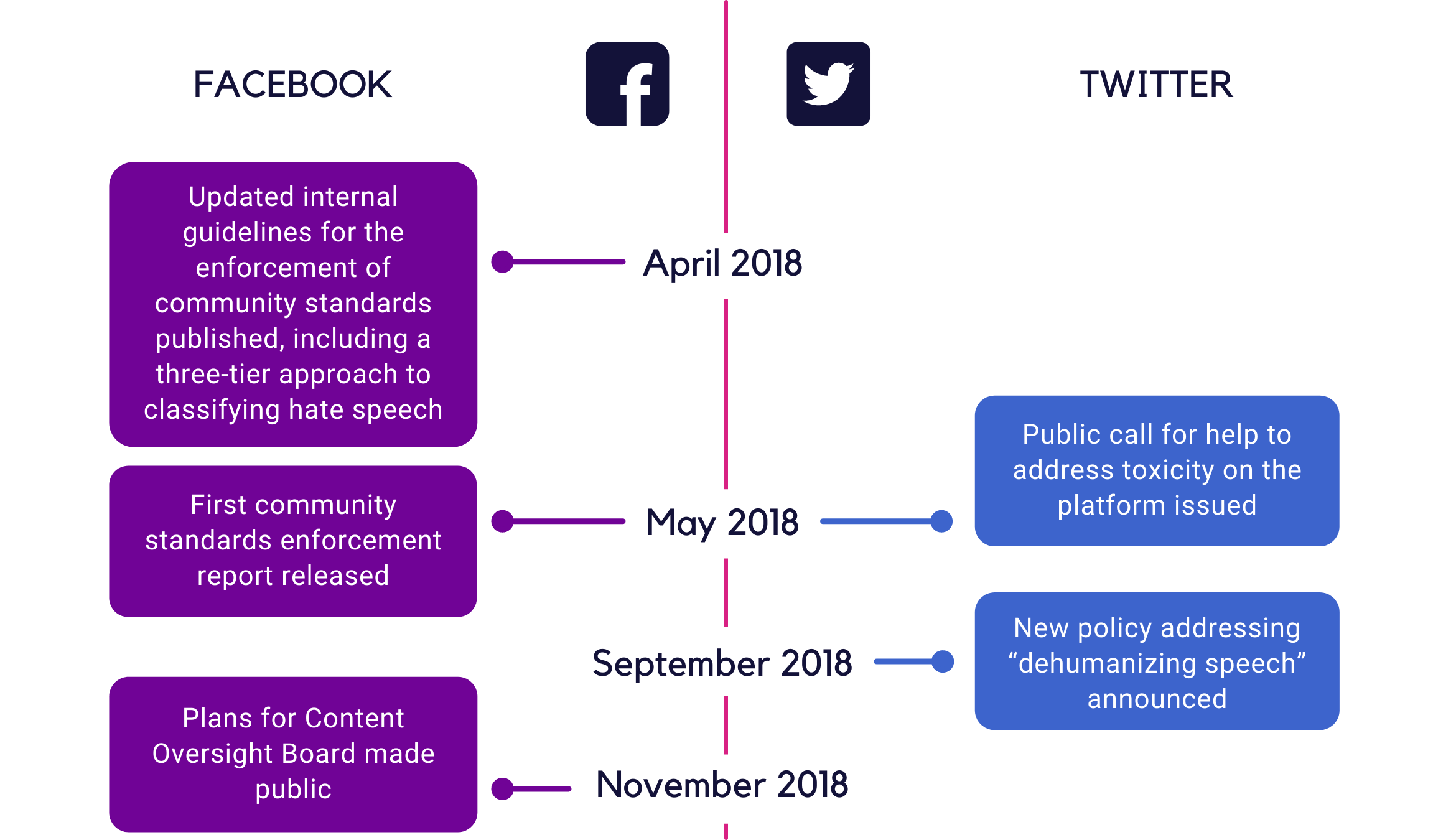

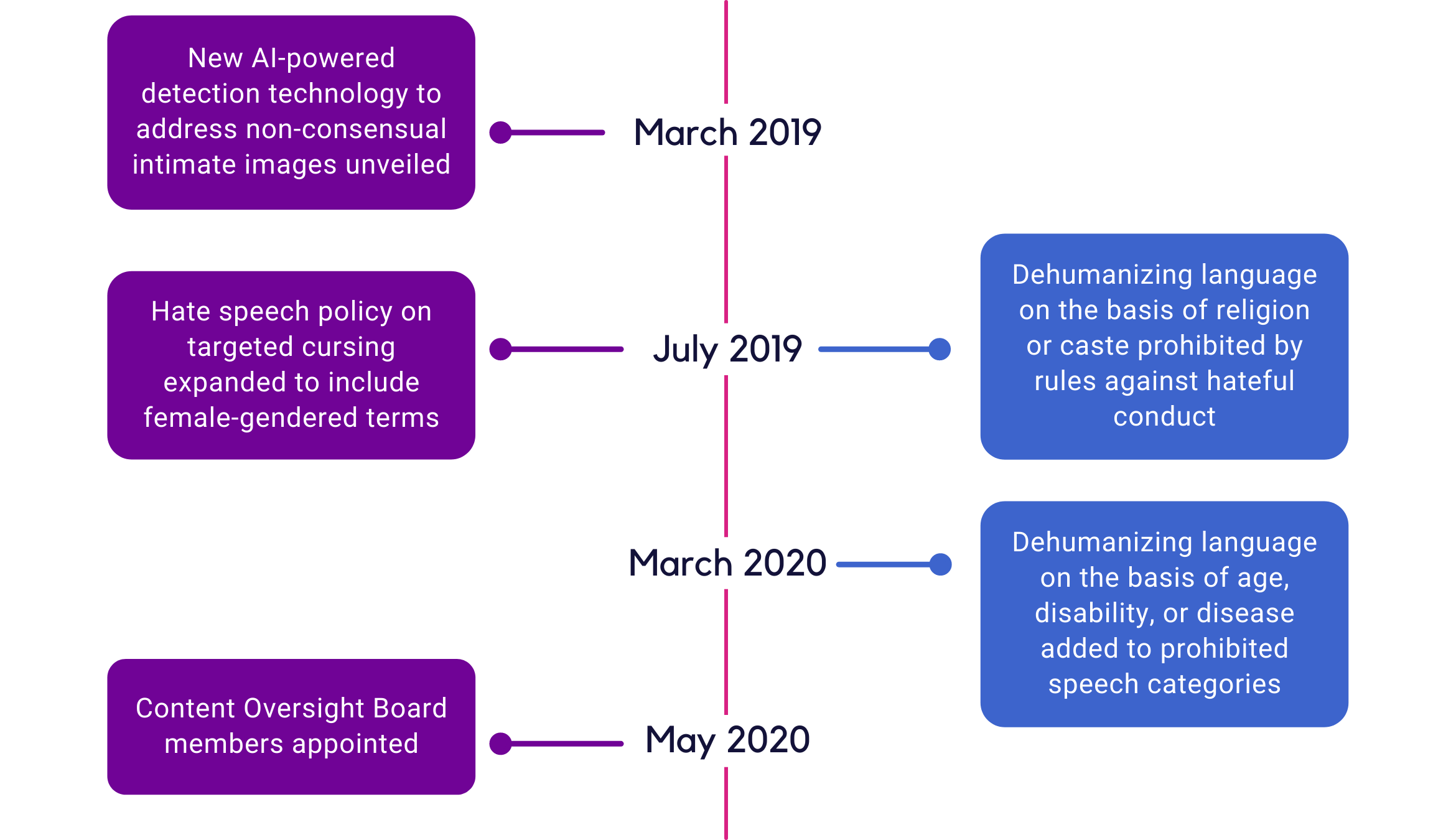

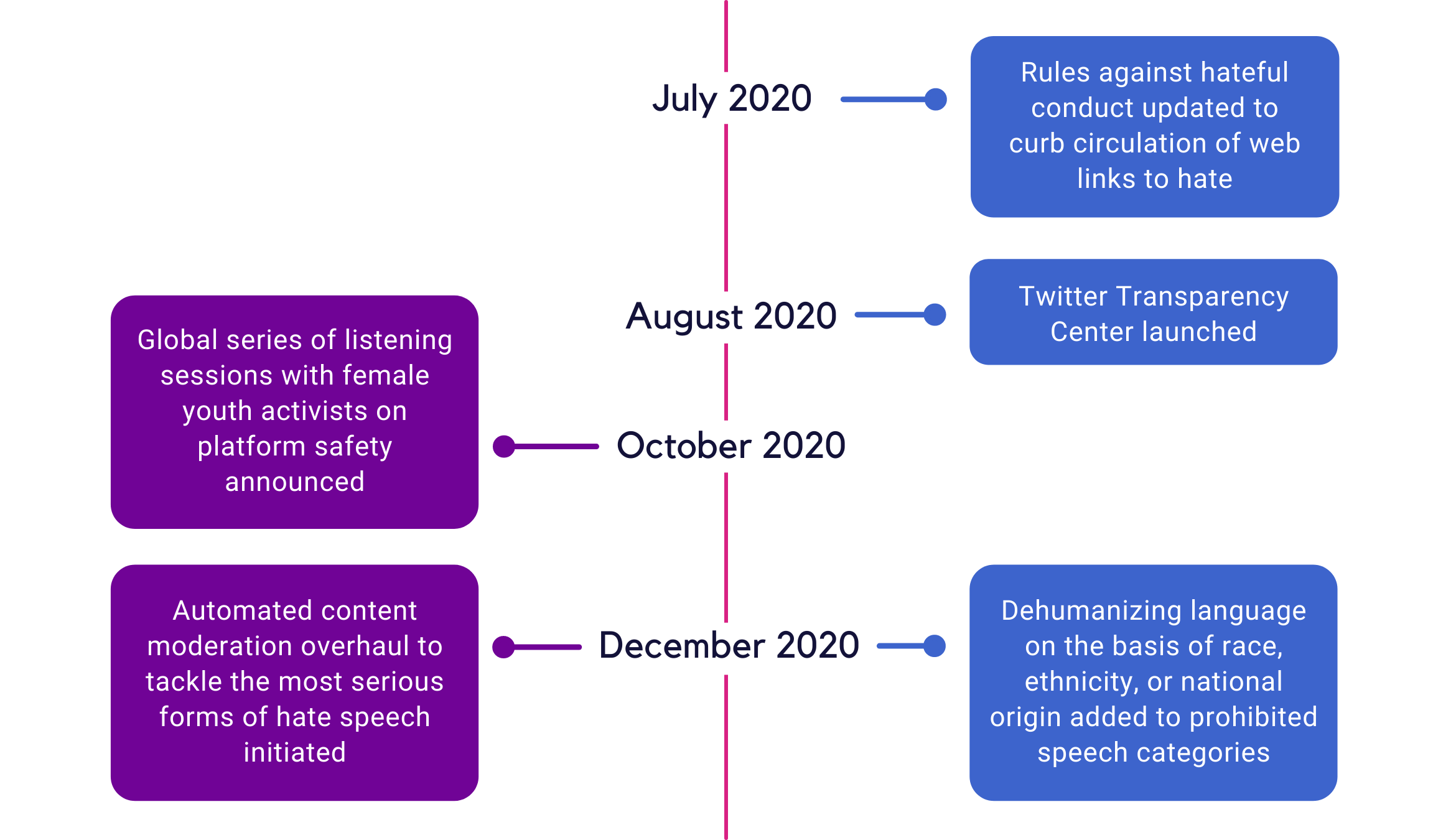

Social media companies themselves have had to acknowledge that something is badly broken in their business model, with worldwide repercussions for women's human rights. Jack Dorsey, the CEO of Twitter, admitted that the platform's interaction environment has created a "pretty terrible situation for women, and particularly women of color". Similarly, Facebook executives have had to accept responsibility for the failure of the platform's community standards to account for gender and social power differentials, a failure that has led to the over-censorship of counter-speech and the normalization of majoritarian hate. In the past couple of years, both companies have announced a slew of corrective measures in response to a tsunami of public criticism.

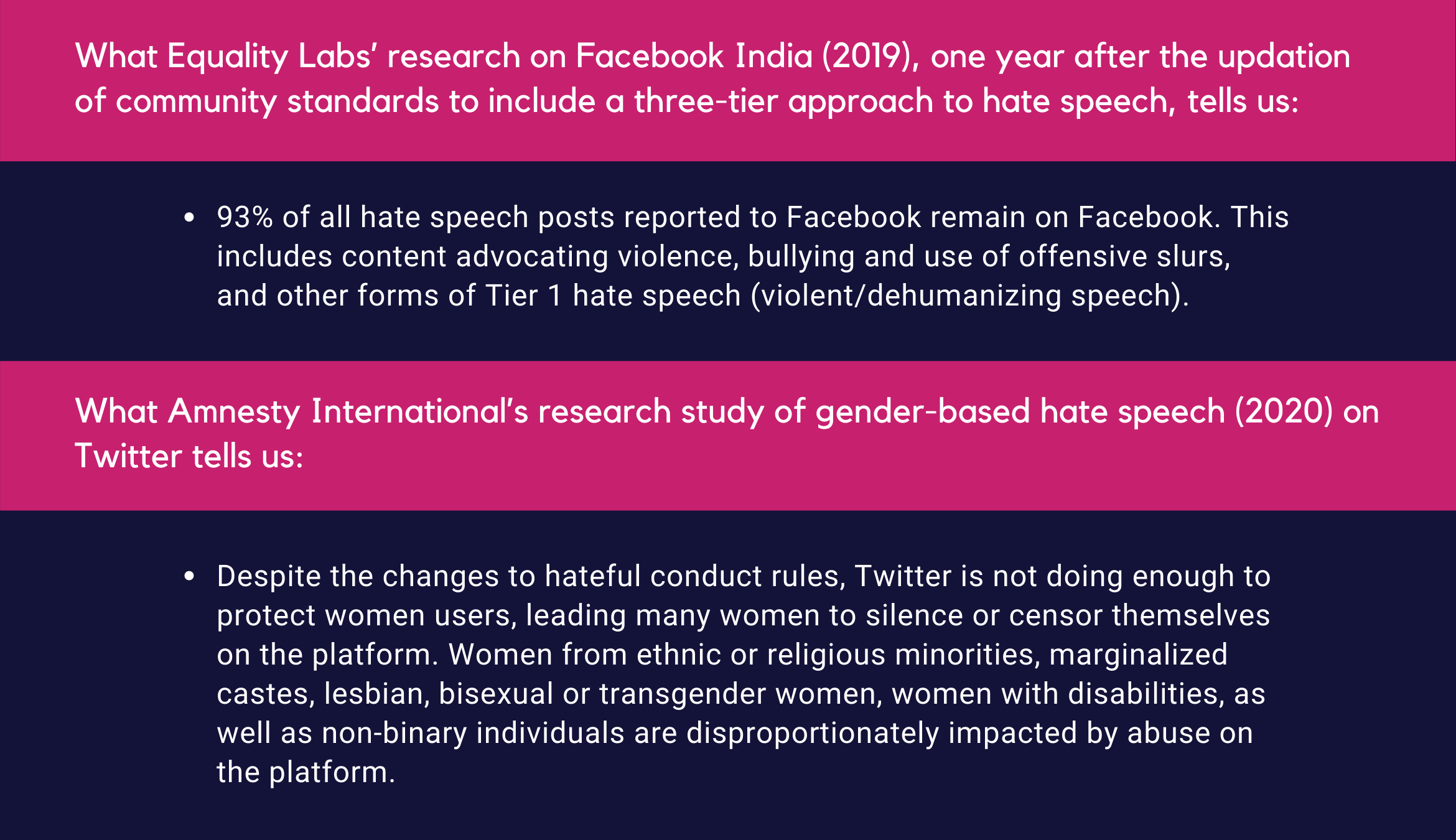

These shifts in content moderation policies and transparency reporting mechanisms, however, do not seem to have led to concrete improvements on the ground.

View the Equality Labs study here. View the Amnesty International study here.

Why have the content moderation efforts of social media companies, which rely on a combination of "flagging systems that depend on users or AI, and a judging system in which humans consult established policies", failed so spectacularly, and why do they keep failing? When it comes to addressing hate speech, one critical shortcoming is a persistently blinkered, siloed approach to evaluating attacks on protected characteristics of personal identity. A 2020 study of Facebook's hate speech removal process by researchers Caitlin Ring Carlson and Hayley Rousselle found that "while slurs that demeaned others based on their sexual orientation or their ethnicity were quickly and regularly removed, slurs that demeaned someone based on their gender were largely not removed when reported".

🎙️ Listen to Erika on the Bot Populi podcast on April 5, 2021. 🎙️

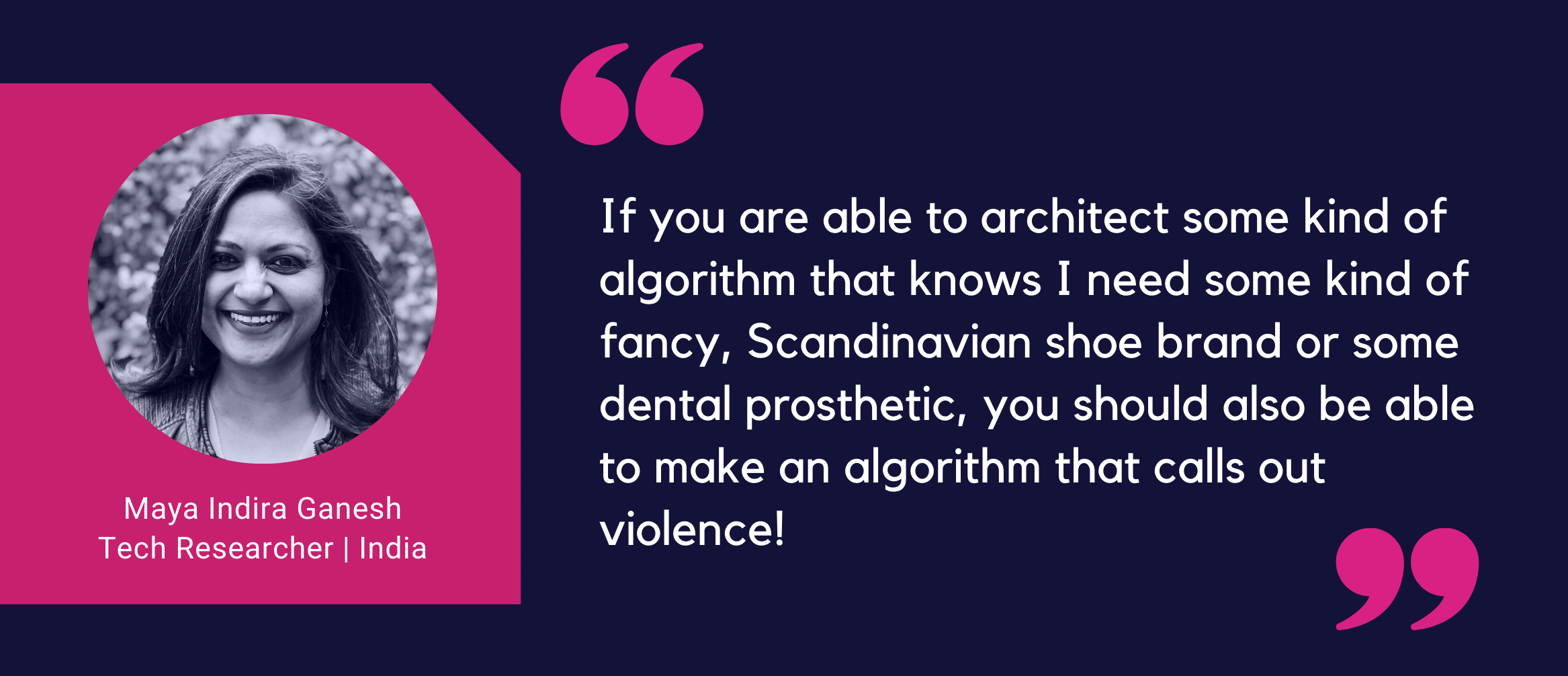

The slow pace of progress in improving the automated flagging and detection of hateful content is another critical issue. Platforms tend to cite the immaturity of AI technologies, including natural language processing techniques, as an excuse, but more often than not the real problem is a lack of political will. Researcher Cathy O'Neil recently called out the abysmally low sums that platforms such as YouTube and Twitter devote to anti-harassment projects. Facebook's 'Responsible AI' initiative has been criticized for focusing on peripheral problems such as bias in adverts rather than on the core problems of misinformation, hate, and polarizing content. Despite all of Facebook's public claims about improving its AI techniques for hate detection, its most accurate algorithm has achieved only 64.7% accuracy, far below the 85% achieved by human moderators. This is worrying, given that during the pandemic Facebook scaled back its teams of moderators and turned increasingly to algorithmic flagging.

Activists from the Global South have repeatedly raised, for over a decade, the inadequacy of content moderation systems in responding to user complaints about hateful content in local languages, especially minority languages. Platforms have not responded to this issue uniformly or consistently; their responsiveness often correlates directly with their market interests.

🎙️ Listen to Chenai on the Bot Populi podcast. 🎙️

It is not just user requests from the Global South that social media companies tend to ignore. Law enforcement officials investigating complaints of gender-based cyberviolence find themselves in the same predicament, with very little leeway even to obtain the digital evidence they need to support their investigations.

🎙️ Listen to Sohini on the Bot Populi podcast. 🎙️

Instead of fixing these deep-rooted structural problems in existing content governance arrangements, social media platforms have unfortunately tended to prioritize periodic announcements of 'grand fixes' that capture the public imagination, even when their actual impact is limited. Take the case of Facebook's Oversight Board. The Board can only rule on whether posts have been wrongly taken down, not on posts that are allowed to remain, which means that failures to take down hateful content fall outside its remit.

🎙️ Listen to Marwa on the Bot Populi podcast. 🎙️

If the emancipatory potential of social media is to be reclaimed, the solution clearly cannot be left to platform self-regulation. Governance gaps have produced the current chaos, in which social media platforms function as quasi-states exercising sovereignty over the algorithmified public sphere "without the consent of the networked" (in Rebecca MacKinnon's evocative formulation). We need a new global normative benchmarking exercise at the multilateral level to evolve common regulatory standards for content governance across social media platforms, so that the speech permitted in these spaces stays within the limits of free speech envisioned by the International Covenant on Civil and Political Rights and other relevant treaties, such as the Convention on the Elimination of All Forms of Racial Discrimination and the Convention on the Elimination of All Forms of Discrimination Against Women. This may not be a simple task, but the erosion of the public sphere's democratic integrity and the disproportionate gendered costs of the private governance of social media demand urgent global action.

Even as we struggle to re-establish the rule of law in the digital wild west of global social media platforms, we need to keep up the quest for alternative feminist communication infrastructures.

🎙️ Listen to Ledys on the Bot Populi podcast. 🎙️

The podcast series Feminist Digital Futures has been produced as part of the Feminist Digital Justice project, a joint policy research and advocacy initiative of IT for Change and DAWN (Development Alternatives with Women for a New Era). The series is co-supported by the World Wide Web Foundation.

Listen on SoundCloud, Spotify, or Google Podcasts now.

Cover artwork by Harmeet Rahal